Navigating the Challenges of Sparse Datasets in Machine Learning

Sparse datasets are ubiquitous in the machine learning landscape, and navigating the challenges they present is crucial for developing robust and efficient models. In this blog post, we’ll delve into why sparse datasets can cause poor performance in some machine learning algorithms, explore solutions to overcome these challenges, and provide code snippets for a hands-on understanding.

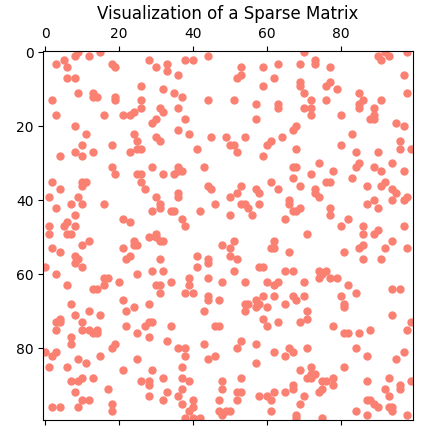

Understanding Sparse Datasets

Sparse datasets are characterized by having a large proportion of missing or zero values. This sparsity can result from various scenarios, such as user-item interactions in recommendation systems or word occurrences in text data. While handling sparse data can be intricate, understanding its challenges is the first step towards crafting efficient solutions.
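To make the definition concrete, here is a minimal sketch that builds a synthetic sparse matrix with SciPy and measures how sparse it is. The matrix size, the 1% density, and the name `sparse_matrix` are illustrative assumptions rather than values taken from a real dataset.

```python
from scipy import sparse

# Synthetic 1000 x 1000 matrix in CSR format with about 1% non-zero entries.
sparse_matrix = sparse.random(1000, 1000, density=0.01, format="csr", random_state=42)

# Sparsity is the fraction of entries that are not stored (i.e. zero).
n_rows, n_cols = sparse_matrix.shape
sparsity = 1.0 - sparse_matrix.nnz / (n_rows * n_cols)

# CSR keeps only the non-zero values plus two index arrays.
sparse_bytes = (
    sparse_matrix.data.nbytes
    + sparse_matrix.indices.nbytes
    + sparse_matrix.indptr.nbytes
)
dense_bytes = sparse_matrix.toarray().nbytes

print(f"Sparsity: {sparsity:.2%}")
print(f"CSR storage: {sparse_bytes} bytes, dense storage: {dense_bytes} bytes")
```

Keeping the data in a sparse format such as CSR is usually the first practical step, since many scikit-learn estimators accept it directly.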


Challenges Posed by Sparse Datasets

- Insufficient Information: Learning meaningful patterns becomes difficult due to the scarcity of non-zero values.
- High Dimensionality: The curse of dimensionality can affect distance-based algorithms by distorting the meaningfulness of distances between data points.
- Overfitting: The model might capture noise as patterns, resulting in poor generalization to unseen data.
- Computational Inefficiency: Some algorithms struggle with processing high-dimensional sparse data efficiently.
- Imbalance: Sparse datasets might introduce class imbalance, leading to biased models.
- Feature Importance: Determining which features are informative is challenging in sparse scenarios.
- Distance Measures: For algorithms that rely on distance measures, such as k-nearest neighbors (KNN) or support vector machines (SVM), sparse datasets can distort distances between data points, making it difficult to find similarities and differences.
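The distance issue in the list above can be illustrated with a small experiment. This is a rough sketch on synthetic data: the helper `distance_spread`, the sample count, and the 10% density are assumptions made for illustration. It measures how much the Euclidean distances from one point to all others vary, relative to their mean.

```python
from scipy import sparse
from sklearn.metrics.pairwise import euclidean_distances


def distance_spread(n_features, n_samples=200, density=0.1, seed=0):
    """Return std / mean of the distances from the first row to every other row."""
    X = sparse.random(
        n_samples, n_features, density=density, format="csr", random_state=seed
    )
    distances = euclidean_distances(X[0], X[1:]).ravel()
    return distances.std() / distances.mean()


spread_low = distance_spread(n_features=20)
spread_high = distance_spread(n_features=2000)

print(f"Relative spread with 20 features:   {spread_low:.3f}")
print(f"Relative spread with 2000 features: {spread_high:.3f}")
```

A smaller relative spread means the nearest and farthest neighbors sit at almost the same distance, which is what makes neighbor-based methods less reliable as dimensionality grows.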

Strategies to Overcome the Challenges

Dimensionality Reduction

PCA (Principal Component Analysis) can help in reducing the feature space while retaining the most important information.
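A minimal sketch with scikit-learn, assuming a synthetic `sparse_matrix` and an arbitrary target of 10 components. PCA centers the data, so it is applied to a dense copy here; `TruncatedSVD` is shown next to it as a related technique (not PCA itself) that operates on the sparse matrix directly.

```python
from scipy import sparse
from sklearn.decomposition import PCA, TruncatedSVD

# Synthetic data: 100 samples, 1000 features, about 1% non-zero entries.
sparse_matrix = sparse.random(100, 1000, density=0.01, format="csr", random_state=42)

# PCA on a dense copy; this is only practical when the dense array fits in memory.
pca = PCA(n_components=10, random_state=42)
reduced_pca = pca.fit_transform(sparse_matrix.toarray())

# TruncatedSVD skips centering and accepts the sparse matrix as is.
svd = TruncatedSVD(n_components=10, random_state=42)
reduced_svd = svd.fit_transform(sparse_matrix)

print(reduced_pca.shape, reduced_svd.shape)
print(f"Variance retained by PCA: {pca.explained_variance_ratio_.sum():.2%}")
```

For matrices too large to densify, the `TruncatedSVD` route is usually the more practical of the two.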

Imputation

Filling missing values based on certain strategies, such as mean imputation, can mitigate the impact of sparsity.
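As a small sketch of mean imputation, assuming missing entries are encoded as `NaN` in a tiny hand-made array (the values are illustrative only), scikit-learn's `SimpleImputer` replaces each missing entry with the mean of its column:

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Toy data where NaN marks a missing value.
X = np.array(
    [
        [1.0, np.nan, 3.0],
        [4.0, 5.0, np.nan],
        [np.nan, 8.0, 9.0],
    ]
)

# Replace each NaN with the mean of the observed values in the same column.
imputer = SimpleImputer(missing_values=np.nan, strategy="mean")
X_imputed = imputer.fit_transform(X)

print(X_imputed)
```

Note that this only makes sense when a blank really means "unknown". If a zero means "no interaction" or "word not present", it is a valid observation and should not be imputed.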

Feature Selection

Retaining only the most informative features can lead to improved model robustness.
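A minimal sketch of one way to do this, assuming scikit-learn's `SelectKBest` with a chi-squared score. The text does not name a specific selector, so that choice, the synthetic `sparse_matrix`, the random `target_variable`, and `k=10` are all assumptions.

```python
import numpy as np
from scipy import sparse
from sklearn.feature_selection import SelectKBest, chi2

rng = np.random.RandomState(42)

# Synthetic non-negative features (chi2 requires non-negative values).
sparse_matrix = sparse.random(200, 100, density=0.05, format="csr", random_state=42)

# Random binary labels, one per row; placeholders for real labels.
target_variable = rng.randint(0, 2, size=200)

# Keep the 10 features with the highest chi-squared score against the labels.
selector = SelectKBest(score_func=chi2, k=10)
selected_features = selector.fit_transform(sparse_matrix, target_variable)

print(selected_features.shape)
print("Kept columns:", selector.get_support(indices=True))
```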

The target_variable is the dependent variable we are trying to predict in a supervised learning task. In the context of the code snippet, it should be the label or the output corresponding to each data point (row) in your sparse_matrix.

In this example, target_variable is generated randomly, assuming a binary classification task. In a real-world scenario, target_variable would contain the actual labels of your data points. For each row in your sparse_matrix, there should be a corresponding label in target_variable.

Regularization

L1 and L2 regularization can prevent overfitting by penalizing large coefficients.
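The difference between the two penalties can be sketched with scikit-learn's `Lasso` (L1) and `Ridge` (L2) on a synthetic regression problem. The data, the five "true" features, and the `alpha` values are assumptions chosen for illustration, not tuned settings.

```python
import numpy as np
from scipy import sparse
from sklearn.linear_model import Lasso, Ridge

rng = np.random.RandomState(42)

# Synthetic sparse design matrix: 200 samples, 50 features.
X = sparse.random(200, 50, density=0.1, format="csr", random_state=42)

# Synthetic target: only the first five features carry signal, plus small noise.
true_coef = np.zeros(50)
true_coef[:5] = 3.0
y = X @ true_coef + 0.1 * rng.randn(200)

# L1 penalty: tends to drive uninformative coefficients to exactly zero.
lasso = Lasso(alpha=0.01).fit(X, y)

# L2 penalty: shrinks coefficients but rarely makes them exactly zero.
ridge = Ridge(alpha=1.0).fit(X, y)

n_lasso = np.count_nonzero(lasso.coef_)
n_ridge = np.count_nonzero(ridge.coef_)
print(f"Non-zero coefficients: Lasso={n_lasso}, Ridge={n_ridge}")
```

L1 therefore doubles as a form of feature selection, which can be useful when most columns of a sparse matrix are uninformative.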

Ensemble Methods

Random Forests, an ensemble method, can aid in improving generalization and managing overfitting.
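A minimal sketch of fitting a Random Forest on sparse input with scikit-learn, assuming synthetic features and random binary labels as placeholders. Because the labels are random, the cross-validated accuracy should hover around chance; the point is the workflow, not the score.

```python
import numpy as np
from scipy import sparse
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

rng = np.random.RandomState(42)

# Synthetic sparse features and placeholder binary labels.
sparse_matrix = sparse.random(200, 100, density=0.05, format="csr", random_state=42)
target_variable = rng.randint(0, 2, size=200)

# RandomForestClassifier accepts CSR input without converting it to dense.
forest = RandomForestClassifier(n_estimators=100, random_state=42)

# 5-fold cross-validation gives a rough estimate of generalization.
scores = cross_val_score(forest, sparse_matrix, target_variable, cv=5)

print(f"Mean accuracy: {scores.mean():.3f} (+/- {scores.std():.3f})")
```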

Conclusion

While sparse datasets pose several challenges in machine learning, ranging from high dimensionality to overfitting, a variety of strategies and techniques exist to navigate these issues. By adopting appropriate methods such as dimensionality reduction, imputation, and regularization, we can harness the potential of sparse data and build effective and robust machine learning models.

https://karobben.github.io/2023/09/27/Python/sparse-datasets/